4 - Monitor your model

Deploy and maintain models with vetiver

Plan for this workshop

- Versioning
  - Managing change in models ✅
- Deploying
  - Putting models in REST APIs 🎯
- Monitoring
  - Tracking model performance 👀

Data for model development

Data that you use while building a model for training/testing

R

Python

Data for model monitoring

New data that you predict on after your model is deployed

R

Python

My model is performing well!

👩🏼‍🔧 My model returns predictions quickly, doesn’t use too much memory or processing power, and doesn’t have outages.

Metrics

- latency

- memory and CPU usage

- uptime
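A minimal sketch of how latency and memory use might be measured for a prediction call, using only the Python standard library. The `fake_predict` function is a hypothetical stand-in for a request to a deployed model; in practice these numbers often come from the serving platform's own logs rather than from code like this.

```python
import statistics
import time
import tracemalloc


def fake_predict(rows):
    # Hypothetical stand-in for a request to a deployed model
    return [0 for _ in rows]


def measure_latency(predict_fn, rows, n_calls=20):
    """Return per-call latencies in seconds for repeated calls."""
    latencies = []
    for _ in range(n_calls):
        start = time.perf_counter()
        predict_fn(rows)
        latencies.append(time.perf_counter() - start)
    return latencies


def measure_peak_memory(predict_fn, rows):
    """Return the peak number of bytes Python allocated during one call."""
    tracemalloc.start()
    predict_fn(rows)
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return peak


rows = list(range(1000))
latencies = measure_latency(fake_predict, rows)
summary = {
    "median_seconds": statistics.median(latencies),
    "max_seconds": max(latencies),
    "peak_bytes": measure_peak_memory(fake_predict, rows),
}
print(summary)
```

Tracking the median alongside the maximum helps separate typical response time from occasional slow calls.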

My model is performing well!

👩🏽‍🔬 My model returns predictions that are close to the true values for the predicted quantity.

Metrics

- accuracy

- ROC AUC

- F1 score

- RMSE

- log loss
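To make these metrics concrete, here is a small sketch that computes several of them by hand with NumPy. The data is made up for illustration, and ROC AUC is left out to keep the example short; in real work you would likely use a metrics library such as scikit-learn or yardstick.

```python
import numpy as np

# Toy classification data (made up): 1 = fail, 0 = pass
y_true = np.array([1, 0, 1, 1, 0, 0, 1, 0])
y_prob = np.array([0.9, 0.2, 0.4, 0.8, 0.1, 0.6, 0.7, 0.3])
y_pred = (y_prob >= 0.5).astype(int)

# Counts from the confusion matrix
tp = int(np.sum((y_pred == 1) & (y_true == 1)))
fp = int(np.sum((y_pred == 1) & (y_true == 0)))
fn = int(np.sum((y_pred == 0) & (y_true == 1)))

accuracy = float(np.mean(y_pred == y_true))
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)
log_loss = float(
    -np.mean(y_true * np.log(y_prob) + (1 - y_true) * np.log(1 - y_prob))
)

# Toy regression data (made up) for RMSE
observed = np.array([3.0, 5.0, 2.0, 7.0])
estimated = np.array([2.5, 5.5, 2.0, 8.0])
rmse = float(np.sqrt(np.mean((observed - estimated) ** 2)))

print({"accuracy": accuracy, "f1": f1, "log_loss": log_loss, "rmse": rmse})
```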

Model drift 📉

DATA drift

Model drift 📉

CONCEPT drift
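A small simulation can help tell the two kinds of drift apart. In this sketch, data drift means the distribution of the inputs changes, while concept drift means the relationship between inputs and outcome changes. All data and the one-feature "model" are invented for illustration. Because the toy model is perfect on the development data, data drift does not hurt its accuracy here; with a real model it often does.

```python
import numpy as np

rng = np.random.default_rng(123)
n = 5000


def model(x):
    # Fixed rule "learned" during development: predict 1 when x > 0
    return (x > 0).astype(int)


def accuracy(x, y):
    return float(np.mean(model(x) == y))


# Development data: x ~ N(0, 1), outcome is 1 when x > 0
x_dev = rng.normal(0.0, 1.0, n)
y_dev = (x_dev > 0).astype(int)

# Data drift: the inputs shift, the input/outcome relationship is unchanged
x_data = rng.normal(1.5, 1.0, n)
y_data = (x_data > 0).astype(int)

# Concept drift: the inputs look the same, but now the outcome is 1 when x > 1
x_concept = rng.normal(0.0, 1.0, n)
y_concept = (x_concept > 1).astype(int)

report = {
    "development": (float(x_dev.mean()), accuracy(x_dev, y_dev)),
    "data drift": (float(x_data.mean()), accuracy(x_data, y_data)),
    "concept drift": (float(x_concept.mean()), accuracy(x_concept, y_concept)),
}
for name, (mean_x, acc) in report.items():
    print(f"{name}: mean of x = {mean_x:.2f}, accuracy = {acc:.2f}")
```

Monitoring only the inputs would flag the data drift case but miss the concept drift case, which shows up only when predictions are compared with true outcomes.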

When should you retrain your model? 🧐

Your turn 🏺

Activity

Using our Chicago data, what could be an example of data drift? Concept drift?

05:00

Learn more about model monitoring tomorrow 🚀

Come hear Julia’s talk on reliable maintenance of ML models on Tues, Sept 19 at 3pm in Grand Ballroom CD!

Monitor your model’s inputs

Typically, it is most useful to compare against your model development data:

- Statistical distribution of features individually

- Statistical characteristics of features as a whole

- Applicability scores: https://applicable.tidymodels.org/
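As one way to compare the distribution of a single feature, the sketch below puts summary statistics for development and monitoring data side by side with pandas. The `n_violations` column and both data frames are made up for illustration and are not part of the workshop data.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)

# Made-up stand-ins for the development and monitoring data
development = pd.DataFrame({"n_violations": rng.poisson(3.0, 500)})
monitoring = pd.DataFrame({"n_violations": rng.poisson(4.5, 200)})


def summarize(df, label):
    """Selected summary statistics for the hypothetical feature."""
    stats = df["n_violations"].describe()[["mean", "std", "25%", "50%", "75%"]]
    return stats.rename(label)


comparison = pd.concat(
    [summarize(development, "development"), summarize(monitoring, "monitoring")],
    axis=1,
)
comparison["difference"] = comparison["monitoring"] - comparison["development"]
print(comparison.round(2))
```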

Your turn 🏺

Activity

Create a plot or table comparing the development vs. monitoring distributions of a model input/feature.

How might you make this comparison if you didn’t have all the model development data available when monitoring?

What summary statistics might you record during model development, to prepare for monitoring?

07:00
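One possible approach when the full development data is not available at monitoring time: store bin edges and bin proportions during development, then compare new data against them. The sketch below uses the population stability index (PSI), a technique chosen here for illustration and not something the workshop prescribes. All values are simulated.

```python
import numpy as np


def bin_proportions(values, inner_edges):
    """Share of values falling in each bin defined by the inner edges."""
    idx = np.searchsorted(inner_edges, values)
    counts = np.bincount(idx, minlength=len(inner_edges) + 1)
    return counts / counts.sum()


def psi(expected, actual, eps=1e-6):
    """Population stability index between two sets of bin proportions."""
    expected = np.clip(expected, eps, None)
    actual = np.clip(actual, eps, None)
    return float(np.sum((actual - expected) * np.log(actual / expected)))


rng = np.random.default_rng(7)

# At development time: keep only the decile edges and bin proportions
dev_values = rng.normal(50, 10, 2000)
inner_edges = np.quantile(dev_values, np.linspace(0.1, 0.9, 9))
dev_props = bin_proportions(dev_values, inner_edges)

# At monitoring time: only the stored edges and proportions are needed
similar = rng.normal(50, 10, 1000)
shifted = rng.normal(58, 10, 1000)
psi_similar = psi(dev_props, bin_proportions(similar, inner_edges))
psi_shifted = psi(dev_props, bin_proportions(shifted, inner_edges))
print(round(psi_similar, 3), round(psi_shifted, 3))
```

A larger PSI means the monitoring data is spread across the stored bins differently than the development data was.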

Monitor your model’s outputs

- In some ML systems, you eventually get a “real” result after making a prediction

- If the Chicago Department of Public Health used a model like this one, they would get:
  - A prediction from their model
  - A real inspection result after they did their inspection

- In this case, we can monitor our model’s statistical performance

- If you don’t ever get a “real” result, you can still monitor the distribution of your outputs
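When no "real" result ever arrives, the predictions themselves can still be tracked over time. This sketch computes the share of predicted failures per month with pandas. The data is made up, and the column names only loosely follow the inspections example.

```python
import pandas as pd

# Made-up predictions; no true results are needed for this kind of monitoring
preds = pd.DataFrame({
    "inspection_date": pd.to_datetime([
        "2023-01-05", "2023-01-12", "2023-01-19", "2023-01-26",
        "2023-02-02", "2023-02-16",
        "2023-03-02", "2023-03-09", "2023-03-16", "2023-03-23",
    ]),
    "pred": ["PASS", "PASS", "FAIL", "PASS",
             "FAIL", "PASS",
             "FAIL", "FAIL", "PASS", "FAIL"],
})

# Proportion of predictions that are "FAIL" in each calendar month
month = preds["inspection_date"].dt.to_period("M").rename("month")
prop_fail = (
    preds["pred"].eq("FAIL")
    .groupby(month)
    .mean()
    .rename("prop_predicted_fail")
)
print(prop_fail)
```

A steady rise like the one in this toy data would be a reason to look more closely at the inputs, even without ground truth.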

Monitor your model’s outputs

Python

import pandas as pd
from datetime import timedelta
from sklearn.metrics import recall_score, accuracy_score
from vetiver import vetiver_endpoint, predict, compute_metrics, plot_metrics

# Parse the dates and add month and year columns
inspections_new["inspection_date"] = pd.to_datetime(inspections_new["inspection_date"])
inspections_new["month"] = inspections_new["inspection_date"].dt.month
inspections_new["year"] = inspections_new["inspection_date"].dt.year

# Get predictions for the new data from the deployed model
url = "https://colorado.posit.co/rsc/chicago-inspections-python/predict"
endpoint = vetiver_endpoint(url)
inspections_new["preds"] = predict(
    endpoint = endpoint,
    data = inspections_new.drop(columns = ["results", "aka_name", "inspection_date"])
)

# Encode predictions and true results as 0/1 for the sklearn metrics
inspections_new["preds"] = inspections_new["preds"].map({"PASS": 0, "FAIL": 1})
inspections_new["results"] = inspections_new["results"].map({"PASS": 0, "FAIL": 1})

# Compute each metric over 4-week periods
td = timedelta(weeks = 4)
metric_set = [accuracy_score, recall_score]

m = compute_metrics(
    data = inspections_new,
    date_var = "inspection_date",
    period = td,
    metric_set = metric_set,
    truth = "results",
    estimate = "preds"
)

metrics_plot = plot_metrics(m)

Monitor your model’s outputs

R

library(vetiver)
library(tidymodels)

url <- "https://colorado.posit.co/rsc/chicago-inspections-rstats/predict"
endpoint <- vetiver_endpoint(url)

augment(endpoint, new_data = inspections_new) |>
  mutate(.pred_class = as.factor(.pred_class)) |>
  vetiver_compute_metrics(
    inspection_date,
    "month",
    results,
    .pred_class,
    metric_set = metric_set(accuracy, sensitivity)
  ) |>
  vetiver_plot_metrics()

Your turn 🏺

Activity

Use the functions for metrics monitoring from vetiver to create a monitoring visualization.

Choose a different set of metrics or time aggregation.

Note that there are functions for using pins as a way to version and update monitoring results too!

05:00
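The idea of updating stored monitoring results can be sketched with plain pandas: new metric rows are appended, and rows for dates that were already stored are replaced. This does not use vetiver's own pin functions, and the `update_metrics` helper and the column names are hypothetical. Saving to and loading from a pin is left out.

```python
import pandas as pd


def update_metrics(stored, new, keys=("date", "metric")):
    """Combine stored and new metric rows; new rows win on matching keys."""
    combined = pd.concat([stored, new], ignore_index=True)
    combined = combined.drop_duplicates(subset=list(keys), keep="last")
    return combined.sort_values(list(keys)).reset_index(drop=True)


# Made-up metrics that were stored earlier
stored = pd.DataFrame({
    "date": pd.to_datetime(["2023-07-01", "2023-08-01"]),
    "metric": ["accuracy", "accuracy"],
    "estimate": [0.70, 0.68],
})

# Made-up metrics from the latest monitoring run; August was recomputed
new = pd.DataFrame({
    "date": pd.to_datetime(["2023-08-01", "2023-09-01"]),
    "metric": ["accuracy", "accuracy"],
    "estimate": [0.66, 0.65],
})

updated = update_metrics(stored, new)
print(updated)
```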

Feedback loops 🔁

Deployment of an ML model may alter the training data

- Movie recommendation systems on Netflix, Disney+, Hulu, etc.

- Identifying fraudulent credit card transactions at Stripe

- Recidivism models

Feedback loops can have unexpected consequences

Feedback loops 🔁

- Users take some action as a result of a prediction

- Users rate or correct the quality of a prediction

- Produce annotations (crowdsource or expert)

- Produce feedback automatically

Your turn 🏺

Activity

What is a possible feedback loop for the Chicago inspections data?

Do you think your example would be harmful or helpful?

05:00

ML metrics ➡️ organizational outcomes

- Are machine learning metrics like F1 score or accuracy what matter to your organization?

- Consider how ML metrics are related to important outcomes or KPIs for your business or org

- There isn’t always a 1-to-1 mapping 😔

- You can partner with stakeholders to monitor what’s truly important about your model

Your turn 🏺

Activity

Let’s say that the most important organizational outcome for the Chicago Department of Public Health is what proportion of failed inspections were predicted to fail.

If “fail” is the event we are predicting, this is the sensitivity or recall.

Compute this quantity with the monitoring data, and aggregate by week/month or facility type.

For extra credit, make a visualization showing your results.

07:00
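A minimal sketch of the by-month version of this computation, assuming the same 0/1 encoding as the Python monitoring code (1 = FAIL). The data frame is made up, and the `recall` helper is written by hand here so the definition is visible: of the inspections that truly failed, what share did the model predict as failing?

```python
import pandas as pd


def recall(group):
    """Share of true failures (results == 1) that were predicted to fail."""
    actual_fail = group["results"] == 1
    if actual_fail.sum() == 0:
        return float("nan")  # undefined when there are no true failures
    return float((group.loc[actual_fail, "preds"] == 1).mean())


# Made-up monitoring data
toy = pd.DataFrame({
    "inspection_date": pd.to_datetime([
        "2023-01-03", "2023-01-10", "2023-01-17", "2023-01-24",
        "2023-02-01", "2023-02-07", "2023-02-14", "2023-02-21", "2023-02-28",
    ]),
    "results": [1, 1, 0, 0, 1, 0, 1, 1, 1],
    "preds":   [1, 0, 0, 1, 0, 0, 1, 0, 0],
})

months = toy["inspection_date"].dt.to_period("M")
recall_by_month = {
    str(month): recall(group) for month, group in toy.groupby(months)
}
print(recall_by_month)
```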

ML metrics ➡️ organizational outcomes

R

augment(endpoint, inspections_new) |>
  mutate(.pred_class = as.factor(.pred_class)) |>
  group_by(facility_type) |>
  sensitivity(results, .pred_class)
#> # A tibble: 3 × 4
#>   facility_type .metric     .estimator .estimate
#>   <fct>         <chr>       <chr>          <dbl>
#> 1 BAKERY        sensitivity binary        0
#> 2 GROCERY STORE sensitivity binary        0.0110
#> 3 RESTAURANT    sensitivity binary        0.0151

Python

by_facility_type = pd.DataFrame()
for metric in metric_set:
    by_facility_type[metric.__qualname__] = inspections_new.groupby("facility_type")\
        .apply(lambda x: metric(y_pred=x["preds"], y_true=x["results"]))
by_facility_type
#>                accuracy_score  recall_score
#> facility_type
#> BAKERY               0.718310      0.071429
#> GROCERY STORE        0.674797      0.164835
#> RESTAURANT           0.654560      0.244556

What is going on with these inspections?! 😳

Possible model monitoring artifacts

- Ad hoc analysis that you post in Slack

- Report that you share in Google Drive

- Fully automated dashboard published to Posit Connect

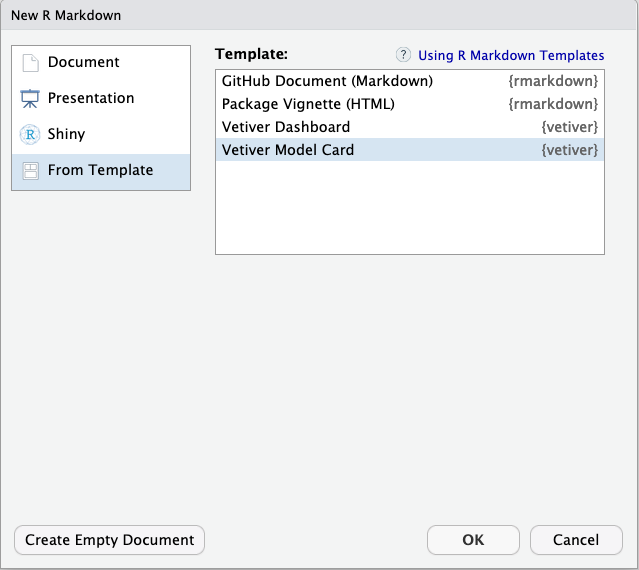

Your turn 🏺

Activity

Create a Quarto report or R Markdown dashboard for model monitoring.

Keep in mind that we are seeing some real ✨problems✨ with this model we trained.

Publish your document to Connect.

15:00

We made it! 🎉

Your turn 🏺

Activity

What is one thing you learned that surprised you?

What is one thing you learned that you plan to use?

05:00

Resources to keep learning

Documentation at https://vetiver.rstudio.com/

Isabel’s talk from rstudio::conf() 2022 on Demystifying MLOps

End-to-end demos from Posit Solution Engineering in R and Python

Are you on the right track with your MLOps system? Use the rubric in “The ML Test Score: A Rubric for ML Production Readiness and Technical Debt Reduction” by Breck et al. (2017)

Want to learn about how MLOps is being practiced? Read one of our favorite 😍 papers of the last year, “Operationalizing Machine Learning: An Interview Study” by Shankar et al. (2022)

Follow Posit and/or us on your preferred social media for updates!

Submit feedback before you leave 🗳️

Your feedback is crucial! Data from the survey informs curriculum and format decisions for future conf workshops and we really appreciate you taking the time to provide it.